[DL Reading Group] "Instant Neural Graphics Primitives with a Multiresolution Hash Encoding"


2022/05/20 Deep Learning JP: http://deeplearning.jp/seminar-2/


![DEEP LEARNING JP [DL Papers] Paper introduction: Instant Neural Graphics Primitives with a Multiresolution Hash Encoding. Ryosuke Ohashi, bestat inc. http://deeplearning.jp/](https://image.slidesharecdn.com/20220520ohashi-220525043909-e8a2e7f7/85/DL-Instant-Neural-Graphics-Primitives-with-a-Multiresolution-Hash-Encoding-1-320.jpg)


- 1. DEEP LEARNING JP [DL Papers] Paper introduction: Instant Neural Graphics Primitives with a Multiresolution Hash Encoding. Ryosuke Ohashi, bestat inc. http://deeplearning.jp/

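- 2. Bibliographic information
  - Paper accepted at SIGGRAPH 2022 (August 2022)
  - Overview
    - Proposes an efficient input encoding method for neural fields (NeRF and others)
    - Learns even the fine details of a field with seconds to minutes of training (on a single RTX 3090)
    - Fast inference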

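- 4. Background: neural fields
  - Fields
    - Something that represents a (physical) quantity spread over space
    - Electromagnetic fields, the density field of a fluid, etc.
  - Neural fields
    - A field represented by a neural network (an MLP, for example)
    - An (unknown) field can be reconstructed from discrete samples of it by gradient-based optimization
    - Became very well known through NeRF (Neural Radiance Fields)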

- 5. Mildenhall et al. 2020, "NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis"

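- 6. Background: the weakness of using an MLP
  - Weakness of using an MLP
    - It is poor at reconstructing the fine details of a field
    - Is the remedy in the architecture, or in the sampling method?
  - Architectural improvements
    - Input encoding
      - It helps to embed the field's input coordinate x into a sequence of values that vary at many different scales before feeding it to the MLP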

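- 7. Background: input encoding
  - Frequency encoding
    - Encodes the input coordinate x with trigonometric functions of various periods
      - NeRF (Mildenhall et al. 2020)
    - LoD (Level of Detail) filtering
      - Mip-NeRF (Barron et al. 2021)
  - Parametric encoding
    - Encodes the input coordinate x with a family of trainable functions
    - Modeling the encoding functions with an MLP would just run into the original problem again
    - Instead, they are modeled with discrete spatial tables or trees
    - Spending memory buys flexible functions at a low computational cost
    - Adaptive and efficient
      - Neural Sparse Voxel Fields (Liu et al. 2020)
      - Neural Geometric Level of Detail (Takikawa et al. 2021)
      - etc.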
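As a rough illustration of the frequency encoding mentioned on slide 7, the sketch below maps a scalar coordinate to sine and cosine features whose frequency doubles at each band, which is the general form used in NeRF-style positional encoding. The function name `frequency_encode` and the default `num_bands=4` are illustrative assumptions, not values from the slides.

```python
import math


def frequency_encode(x, num_bands=4):
    """Encode a scalar coordinate with sin/cos pairs at doubling frequencies.

    Band k contributes sin(2^k * pi * x) and cos(2^k * pi * x), so the
    output has 2 * num_bands features.
    """
    features = []
    for k in range(num_bands):
        angle = (2.0 ** k) * math.pi * x
        features.append(math.sin(angle))
        features.append(math.cos(angle))
    return features


if __name__ == "__main__":
    # Low bands vary slowly with x, high bands vary quickly.
    print(frequency_encode(0.25))
```

For a 3D input, each coordinate would typically be encoded this way and the results concatenated before being passed to the MLP.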

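- 8. Proposed method: multiresolution hash encoding
  - Encoding functions of various frequencies are modeled with discrete spatial tables at various resolutions
    - Because flexible encoding functions can be learned, an MLP far smaller than the one in NeRF and similar methods is enough
    - Even when optimization proceeds with samples from only part of the space, the model can adapt through local changes to the spatial tables without disrupting the MLP
  - A spatial hash maps input coordinates into fixed-size spatial tables
    - The table size can be capped to fit the available memory
    - As many tables at different resolutions as needed can be set up and used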

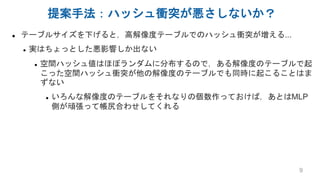
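The idea on slide 8 can be sketched in a few lines. This is a minimal 2D sketch under stated assumptions, not the authors' implementation: the XOR-with-a-large-prime hash follows the general form described in the paper, while the names (`spatial_hash`, `make_tables`, `hash_encode`), the table size, the base resolution, the growth factor and the initialization range are all illustrative. It only performs the lookup, so the table entries that training would update stay at their random initial values, and it hashes every level even where a coarse grid would fit in the table directly.

```python
import math
import random

# Illustrative per-dimension multipliers for the spatial hash (assumption).
PRIMES = (1, 2654435761)


def spatial_hash(ix, iy, table_size):
    """Map an integer grid vertex to a slot in a fixed-size table."""
    return ((ix * PRIMES[0]) ^ (iy * PRIMES[1])) % table_size


def make_tables(num_levels, table_size, num_features, seed=0):
    """One trainable feature table per resolution level, small random init."""
    rng = random.Random(seed)
    return [[[rng.uniform(-1e-4, 1e-4) for _ in range(num_features)]
             for _ in range(table_size)]
            for _ in range(num_levels)]


def hash_encode(x, y, tables, base_res=4, growth=2.0):
    """Encode a point in [0, 1]^2 by concatenating per-level features.

    At each level the point falls into one grid cell; the features of the
    cell's four corners are fetched via the hash and bilinearly blended.
    """
    table_size = len(tables[0])
    encoded = []
    for level, table in enumerate(tables):
        res = int(base_res * growth ** level)
        gx, gy = x * res, y * res
        ix, iy = int(math.floor(gx)), int(math.floor(gy))
        fx, fy = gx - ix, gy - iy
        blended = [0.0] * len(table[0])
        for dx, wx in ((0, 1.0 - fx), (1, fx)):
            for dy, wy in ((0, 1.0 - fy), (1, fy)):
                entry = table[spatial_hash(ix + dx, iy + dy, table_size)]
                for i, value in enumerate(entry):
                    blended[i] += wx * wy * value
        encoded.extend(blended)
    return encoded


if __name__ == "__main__":
    tables = make_tables(num_levels=3, table_size=64, num_features=2)
    print(len(hash_encode(0.3, 0.7, tables)))  # 3 levels x 2 features
```

Because every level uses a table of the same fixed size, memory use is set by `table_size` and the number of levels rather than by the finest grid resolution, which is the property the slide highlights.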

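- 9. Proposed method: do hash collisions cause harm?
  - Reducing the table size increases hash collisions in the high-resolution tables...
  - In practice this has only a minor adverse effect
    - Spatial hash values are distributed almost at random, so a collision that occurs in the table at one resolution almost never occurs at the same time in the tables at other resolutions
    - With a reasonable number of tables at various resolutions, the MLP takes care of reconciling the rest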
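The collision argument on slide 9 can be probed with a small simulation. The sketch below draws random pairs of 2D points, hashes their grid cells at two resolutions into equally sized tables, and counts how often a pair collides at each level and at both levels at once. Everything here is an assumption made for illustration (the hash constant, the resolutions 400 and 640, the table size 1024, the number of pairs); it is a plausibility check, not a reproduction of any experiment in the paper.

```python
import random

# Illustrative multiplier for the second coordinate (assumption).
PRIME_Y = 2654435761


def cell_hash(ix, iy, table_size):
    """Hash an integer grid cell into a fixed-size table."""
    return (ix ^ (iy * PRIME_Y)) % table_size


def count_collisions(num_pairs=100_000, res_a=400, res_b=640,
                     table_size=1024, seed=0):
    """Count point pairs whose cells collide at level A, level B, and both."""
    rng = random.Random(seed)
    hits_a = hits_b = hits_both = 0
    for _ in range(num_pairs):
        p = (rng.random(), rng.random())
        q = (rng.random(), rng.random())
        collided = []
        for res in (res_a, res_b):
            cp = (int(p[0] * res), int(p[1] * res))
            cq = (int(q[0] * res), int(q[1] * res))
            # Only two distinct cells sharing a slot count as a collision.
            collided.append(
                cp != cq
                and cell_hash(*cp, table_size) == cell_hash(*cq, table_size)
            )
        hits_a += collided[0]
        hits_b += collided[1]
        hits_both += collided[0] and collided[1]
    return hits_a, hits_b, hits_both


if __name__ == "__main__":
    a, b, both = count_collisions()
    print(f"level A: {a}, level B: {b}, both levels: {both}")
```

If the hash behaves close to randomly, the count for both levels should be roughly the product of the two per-level rates times the number of pairs, and so far below either single-level count.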

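- 11. Results: fast inference
  - Rendering a NeRF at 1080p (128 samples per pixel) with a depth-of-field (DoF) effect takes 5 seconds on an RTX 3090
  - A simpler rendering method without the DoF effect can run in real time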
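To put the figure on slide 11 in perspective, the arithmetic below estimates the implied throughput. It assumes that "1080p" means a 1920x1080 frame and that the 128 samples are taken per pixel for the depth-of-field effect; the slide does not say how many network evaluations each sample requires, so this is only a count of pixel samples.

```python
# Assumed frame size for "1080p" (not stated on the slide).
width, height = 1920, 1080
samples_per_pixel = 128
render_seconds = 5.0

total_samples = width * height * samples_per_pixel
samples_per_second = total_samples / render_seconds

print(f"{total_samples:,} pixel samples per frame")
print(f"{samples_per_second:,.0f} pixel samples per second")
```

Under these assumptions the frame contains about 265 million pixel samples, or about 53 million per second.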

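- 12. Summary and impressions
  - Summary
    - Proposes "multiresolution hash encoding", a new input encoding method for neural fields
    - With a spatial hash, the full power of multiresolution table encodings can be used without worrying much about the memory required
  - Impressions
    - It feels likely to become the de facto standard architecture for neural fields
    - It would be interesting if it could also be applied to tasks such as image classification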

Editor's Notes

- #2: Beyond Reward Based End-to-End RL: Representation Learning and Dataset Optimization Perspective