
Recommended

The Forward-Forward Algorithm (taeseon ryu)

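This paper introduces a new learning procedure for neural networks, the 'Forward-Forward algorithm'. Backpropagation uses a 'forward-backward' pair of passes: a forward pass followed by a backward pass. This algorithm replaces them with two forward passes ('forward-forward'), which is where the name 'Forward-Forward' comes from.

The algorithm uses two kinds of data, 'positive data' and 'negative data'. 'Positive data' is real data, while 'negative data' can be generated by the network itself. Each layer has its own objective function: to have high goodness for 'positive data' and low goodness for 'negative data'.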

If the positive and negative passes can be separated in time, the negative passes can be done offline. This would make learning in the positive pass much simpler, and it could allow video to be pipelined through the network without storing activities or stopping to propagate derivatives.
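The per-layer objective described above can be sketched as follows. This is a minimal sketch that assumes, as in Hinton's paper, that goodness is the sum of squared activities. It also assumes that the probability an input is positive is the logistic function of goodness minus a threshold θ. The function names and the default θ are illustrative assumptions, not taken from the slides.

```python
import math

def goodness(activities):
    """Goodness of one layer for one input: the sum of squared activities."""
    return sum(a * a for a in activities)

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def ff_layer_loss(pos_activities, neg_activities, theta=2.0):
    """Layer-local Forward-Forward loss for one positive and one negative input.

    With p = sigmoid(goodness - theta) as the probability of "positive",
    the loss is -log p(pos) - log(1 - p(neg)), written with softplus as
    softplus(theta - g_pos) + softplus(g_neg - theta).
    """
    g_pos = goodness(pos_activities)
    g_neg = goodness(neg_activities)
    return softplus(theta - g_pos) + softplus(g_neg - theta)

# High goodness on positive data and low goodness on negative data gives a small loss.
print(ff_layer_loss([2.0, 1.0], [0.1, 0.2]))  # small
print(ff_layer_loss([0.1, 0.2], [2.0, 1.0]))  # large
```

Hinton's paper also length-normalizes each layer's activity vector before passing it to the next layer, so a later layer cannot simply read off the previous layer's goodness. That step is omitted here, as is the per-layer optimizer.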

Mastering GAN (Generative Adversarial Network) in One Hour (NAVER Engineering)

Speaker: Yunjey Choi

Yunjey Choi studied at Korea University and is currently studying Machine Learning. He first learned Deep Learning with TensorFlow about a year ago and is now studying Generative Adversarial Networks with PyTorch. He has worked with TensorFlow and has published a PyTorch Tutorial on GitHub.

Overview:

Generative Adversarial Networks (GANs) were first proposed by Ian Goodfellow in 2014. A GAN is a generative model that estimates the distribution of real data through adversarial training. GANs have recently emerged as one of the most popular research areas, with many related papers appearing every day.

Video: https://youtu.be/odpjk7_tGY0

All About Autoencoders (NAVER Engineering)

Speaker: Hwalsuk Lee (NAVER)

Date: 2017.11.

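Recently, the center of gravity in deep learning research has been shifting rapidly from supervised to unsupervised learning. This course covers everything about autoencoders, the most representative unsupervised learning method. From the dimensionality-reduction perspective, we study the widely used Autoencoder (AE) and its variants, Denoising AE and Contractive AE. From the data-generation perspective, we study the Variational AE (VAE), which has recently drawn a lot of attention, and its variants, Conditional VAE and Adversarial AE. We also look at various applications of autoencoders to find points of contact with industry practice.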

1. Revisit Deep Neural Networks

2. Manifold Learning

3. Autoencoders

4. Variational Autoencoders

5. Applications

Generative Adversarial Networks (GAN) (cvpaper.challenge)

Presentation slides from the cvpaper.challenge Meta Study Group.

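cvpaper.challenge is a challenge to reflect the present state of the computer vision field and to create its trends. We work on paper summaries, idea generation, discussion, implementation, and paper submission, and we share all kinds of knowledge. Goals for 2019: "submit 30+ papers to top conferences" and "carry out comprehensive surveys of top conferences two or more times".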

http://xpaperchallenge.org/cv/

[DL Reading Group] Pixel2Mesh: Generating 3D Mesh Models from Single RGB Images (Deep Learning JP)

2018/10/12

Deep Learning JP:

http://deeplearning.jp/seminar-2/

[Paper introduction] How Powerful are Graph Neural Networks? (Masanao Ochi)

A paper that points out the limitations of the neighbor-node aggregation methods used by recent GNNs, and of stacking layers built from one-layer perceptrons.
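The aggregation limitation can be seen in a toy case. This sketch simplifies node features to scalars, and the function name is hypothetical. Mean and max aggregation send the different neighbor multisets {1, 2} and {1, 1, 2, 2} to the same value, while sum keeps them apart. This is the kind of failure the GIN paper uses to argue for sum-based (injective) aggregation.

```python
from statistics import mean

def aggregate(neighbor_features, how):
    """Aggregate scalar neighbor features with 'sum', 'mean', or 'max'."""
    if how == "sum":
        return sum(neighbor_features)
    if how == "mean":
        return mean(neighbor_features)
    if how == "max":
        return max(neighbor_features)
    raise ValueError(f"unknown aggregator: {how}")

# Two different neighborhoods (multisets of neighbor features).
a = [1.0, 2.0]
b = [1.0, 1.0, 2.0, 2.0]
for how in ("sum", "mean", "max"):
    print(how, aggregate(a, how), aggregate(b, how))
```

Only the sum line prints different values for the two neighborhoods.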

Understanding the Math of Wasserstein GAN I (Sungbin Lim)

These slides explain Example 1 of the Wasserstein GAN paper (https://arxiv.org/abs/1701.07875v2) by Martin Arjovsky, Soumith Chintala, and Léon Bottou.
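For reference, the result of Example 1 in the WGAN paper ("learning parallel lines") can be summarized as follows. Let $Z \sim U[0,1]$, let $\mathbb{P}_0$ be the distribution of $(0, Z) \in \mathbb{R}^2$, and let $\mathbb{P}_\theta$ be the distribution of $g_\theta(Z) = (\theta, Z)$. Then:

```latex
\begin{aligned}
W(\mathbb{P}_0, \mathbb{P}_\theta) &= |\theta|, \\
JS(\mathbb{P}_0, \mathbb{P}_\theta) &=
  \begin{cases} \log 2 & \theta \neq 0 \\ 0 & \theta = 0 \end{cases}, \\
KL(\mathbb{P}_\theta \,\|\, \mathbb{P}_0) = KL(\mathbb{P}_0 \,\|\, \mathbb{P}_\theta) &=
  \begin{cases} +\infty & \theta \neq 0 \\ 0 & \theta = 0 \end{cases}, \\
\delta(\mathbb{P}_0, \mathbb{P}_\theta) &=
  \begin{cases} 1 & \theta \neq 0 \\ 0 & \theta = 0 \end{cases}.
\end{aligned}
```

As $\theta \to 0$, $\mathbb{P}_\theta$ converges to $\mathbb{P}_0$ under the Wasserstein (EM) distance. It does not converge under JS, KL, reverse KL, or total variation $\delta$. Only $W$ is continuous in $\theta$, and so only $W$ gives a useful gradient.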

[DL Reading Group] Model-Based Reinforcement Learning via Meta-Policy Optimization (Deep Learning JP)

2019/07/05

Deep Learning JP:

http://deeplearning.jp/seminar-2/

Hyperopt and Related Topics (Keisuke Hosaka)

These slides explain Hyperopt based on a reading of its original paper.

I have tried to explain not only the overview but also the finer details as far as possible.

[Paper reading group] Self-Attention Generative Adversarial Networks (ARISE analytics)

Slides from a reading-group session on the paper "Self-Attention Generative Adversarial Networks".

[LT slides] I'm a Neural Network amateur, but I still want to somehow keep it happy (Takuji Tahara)

Lightning-talk (LT) slides from [Kaggle Tokyo Meetup #6](https://connpass.com/event/132935/). The talk is short, so the title may oversell it a little; please forgive me.

Content-wise, it all comes down to one sentiment: the learning rate really matters.

[Addendum]

On p.16, regarding "some people do fine with Adam fixed", I received a comment in person that such people are surely still using scheduling. "Fixed" refers only to the choice of optimizer; apparently Adam without scheduling is not that capable.

This also matches my own experience.
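To make the "fixed optimizer, scheduled learning rate" distinction concrete, here is a minimal sketch of one common schedule: linear warmup followed by cosine decay. The schedule shape and all parameter values are illustrative assumptions. The slides do not say which schedule was meant.

```python
import math

def warmup_cosine_lr(step, total_steps, base_lr=1e-3, warmup_steps=100, min_lr=0.0):
    """Learning rate at `step`: linear warmup up to base_lr, then cosine decay to min_lr."""
    if step < warmup_steps:
        # Linear warmup: reaches base_lr at the last warmup step.
        return base_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clipped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

for s in (0, 50, 100, 550, 1000):
    print(s, warmup_cosine_lr(s, total_steps=1000))
```

In PyTorch, the same shape can be used with the optimizer unchanged by passing `lambda s: warmup_cosine_lr(s, total) / base_lr` to `torch.optim.lr_scheduler.LambdaLR`, which expects a multiplicative factor rather than an absolute rate.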

Generative Models (Meta-Survey) (cvpaper.challenge)

Meta-survey presentation slides from cvpaper.challenge.

cvpaper.challenge is a challenge to reflect the present state of the computer vision field and to create its trends. We work on writing paper summaries, idea generation, discussion, implementation, and paper submission, and we share all kinds of knowledge. Our goal for 2020 is to "submit 30+ papers to top conferences".

http://xpaperchallenge.org/cv/

Trends in Unsupervised Image Feature Representation Learning: {Un, Self} supervised representation learning (CVPR 2018 Complete Read-Through... (cvpaper.challenge)

CVPR 2018 Complete Read-Through Challenge report meeting, cvpaper.challenge study session @ Wantedly Shirokanedai office

cvpaper.challenge is a challenge to reflect the present state of the computer vision field and to shape its future. We work broadly on everything from reading and summarizing papers to generating ideas, discussion, implementation, and paper writing (and even real-world deployment), and we share all kinds of knowledge.

http://xpaperchallenge.org/cv/

[DL Reading Group] A Summary of Transformers Beyond Language (ViT, Perceiver, Frozen Pretrained Transformer etc) (Deep Learning JP)

This document summarizes recent research on applying the self-attention mechanism of Transformers to domains other than language, such as computer vision. It discusses models that apply self-attention to images, including ViT, DeiT, and T2T, which apply Transformers to images divided into patches. It also covers more general attention modules such as the Perceiver, which aims to be domain-agnostic. Finally, it discusses work on transferring pretrained language Transformers to other modalities with frozen weights, showing that they can function as universal computation engines.
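ViT-style models begin by cutting an image into non-overlapping patches and flattening each patch into a token vector. Below is a minimal pure-Python sketch of that first step. It assumes a single-channel image given as a list of rows, with height and width divisible by the (hypothetically named) `patch` size. Real implementations work on multi-channel tensors and follow this step with a learned linear projection and position embeddings.

```python
def patchify(image, patch):
    """Split an H x W image (list of rows) into flattened patch x patch tokens.

    Tokens are ordered row-major over the patch grid, and each token lists
    its pixels row by row.
    """
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens

# A 4 x 4 image with pixel values 0..15 yields (4/2) * (4/2) = 4 tokens of length 4.
img = [[r * 4 + c for c in range(4)] for r in range(4)]
print(patchify(img, 2))
```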

Introduction to Graph Neural Networks (ryosuke-kojima)

https://math-coding.connpass.com/event/147508/ Presentation slides for Math&coding#6.

[DL Reading Group] SOLAR: Deep Structured Representations for Model-Based Reinforcement L... (Deep Learning JP)

2019/08/16

Deep Learning JP:

http://deeplearning.jp/seminar-2/

Positive-Unlabeled Learning with Non-Negative Risk Estimator (Kiryo Ryuichi)

Presentation slides from the NIPS 2017 reading group.

[DL Reading Group] Reinforcement Learning with Deep Energy-Based Policies (Deep Learning JP)

2017/4/7

Deep Learning JP:

http://deeplearning.jp/seminar-2/

SSII2020SS: Deep Learning Even on Graph Data: An Introduction to Graph Neural Networks (SSII)

SSII2020 Technology Trends Tutorial Session SS1

6/11 (Thu) 14:00-14:30, main venue (vimeo + sli.do)

Research on DNNs for graph-structured data, i.e. Graph Neural Networks (GNNs), has seen a rapid increase in participating researchers over the past two to three years. Many GNN architectures have now been proposed individually for different domains and tasks, and even getting an overview of the field has become difficult. This tutorial gives an overview of the current state of GNN research, which has spread in many directions, and introduces its fundamental techniques. Time permitting, it also covers application examples in computer vision.

Improving the Accuracy and Speed of Convolutional Neural Networks (Yusuke Uchida)

Since AlexNet appeared at the ILSVRC image recognition competition in 2012, convolutional neural networks (CNNs) have become the de facto standard for image recognition. CNNs are widely used not only for image classification but also as base networks for tasks such as segmentation and object detection. This talk traces the evolution of representative CNNs since AlexNet, surveys the many improvement techniques proposed in recent years, and groups them into several approaches. It also groups the speed-up techniques that matter in practice, such as convolution factorization and pruning, and explains each of them.
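One concrete instance of convolution factorization is to replace a standard k×k convolution with a depthwise k×k convolution followed by a 1×1 pointwise convolution. This is the depthwise separable convolution used in MobileNet. Below is a quick parameter count ignoring biases; the function names and layer sizes are illustrative assumptions.

```python
def conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (no bias)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k conv plus a pointwise 1 x 1 conv (no bias)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv mixes channels
    return depthwise + pointwise

k, c_in, c_out = 3, 256, 256
std = conv_params(k, c_in, c_out)                 # 589,824
sep = depthwise_separable_params(k, c_in, c_out)  # 2,304 + 65,536 = 67,840
print(std, sep, std / sep)
```

The factorized layer has roughly 8.7 times fewer weights here. Whether it is faster in wall-clock terms depends on the hardware and kernels.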

Recent Advances in Convolutional Neural Networks and Accelerating DNNs

Slides from a talk at the 21st STAIR Lab Artificial Intelligence Seminar

https://stair.connpass.com/event/126556/

[DL Reading Group] GPT-4 Technical Report (Deep Learning JP)

2023/4/28

Deep Learning JP

http://deeplearning.jp/seminar-2/

[DL Reading Group] Few-Shot Unsupervised Image-to-Image Translation (Deep Learning JP)

2019/05/17

Deep Learning JP:

http://deeplearning.jp/seminar-2/

Graph Neural Networks and Graph Combinatorial Problems (joisino)

Built around the two papers below, these slides explain the intersection of graph neural networks and graph combinatorial problems. They are a slightly revised version of an invited talk at SIG-FPAI.

* Learning Combinatorial Optimization Algorithm over Graphs (NIPS 2017)

* Approximation Ratios of Graph Neural Networks for Combinatorial Problems (NeurIPS 2019)

[DL Reading Group] Spectral Norm Regularization for Improving the Generalizability of De... (Deep Learning JP)

2017/9/4

Deep Learning JP:

http://deeplearning.jp/seminar-2/

Introduction to Optimizers & Recent Trends (Motokawa Tetsuya)

Presentation slides from the joint seminar of the Tezuka and Wakabayashi labs at the University of Tsukuba, held on 4/16.

[DL Reading Group] Residual Attention Network for Image Classification (Deep Learning JP)

2017/9/4

Deep Learning JP:

http://deeplearning.jp/seminar-2/

More Related Content

Similar to Forward-Forward Algorithm (20)

Imagination-Augmented Agents for Deep Reinforcement Learning

I will introduce DeepMind's paper on the I2A architecture, "Imagination-Augmented Agents for Deep Reinforcement Learning".

These slides were presented at the Deep Learning Study Group in DAVIAN LAB.

Paper link: https://arxiv.org/abs/1707.06203

Nationality recognition


Coursera Machine Learning (by Andrew Ng) (SANG WON PARK)

Week1

Linear Regression with One Variable

Linear Algebra - review

Week2

Linear Regression with Multiple Variables

Octave[incomplete]

Week3

Logistic Regression

Regularization

Week4

Neural Networks - Representation

Week5

Neural Networks - Learning

Week6

Advice for applying machine learning techniques

Machine Learning System Design

Week7

Support Vector Machines

Week8

Unsupervised Learning(Clustering)

Dimensionality Reduction

Week9

Anomaly Detection

Recommender Systems

Week10

Large Scale Machine Learning

Week11

Application Example - Photo OCR

[paper review] Eye in the sky & 3D human pose estimation in video with ... (Gyubin Son)

1. Eye in the Sky: Real-time Drone Surveillance System (DSS) for Violent Individuals Identification using ScatterNet Hybrid Deep Learning Network

https://arxiv.org/abs/1806.00746

2. 3D human pose estimation in video with temporal convolutions and semi-supervised training

https://arxiv.org/abs/1811.11742

[Paper review] neural production system (Seonghoon Jung)

Paper Review of DeepMind's Neural Production System

https://arxiv.org/abs/2103.01937

Densely Connected Convolutional Networks (Oh Yoojin)

HUANG, Gao, et al. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition.

Workshop 210417 dhlee (Dongheon Lee)

Workshop held on 2021. 04. 17.

Introduction to Deep Learning & Machine Learning with Practical Exercise

???? ??? ??: Rainbow ???? ???? (2nd dlcat in Daejeon) (Kyunghwan Kim)

Slides for a talk presented at the 2nd DLCAT.

More from Dong Heon Cho (20)

What is Texture.pdf (Dong Heon Cho)

The document discusses texture analysis in computer vision. It begins by asking what texture is and whether objects themselves can be considered textures. It then outlines several statistical and Fourier approaches to texture analysis, citing specific papers on texture energy measures, texton theory, and using textons to model materials. It also discusses how deep convolutional neural networks can recognize and describe texture through learned filter banks. The concept of texels is introduced as low-level features that make up texture at different scales, from edges to shapes. The document hypothesizes that CNNs are sensitive to texture because texture repeats across images while object shapes do not, and that CNNs act as texture mappers rather than template matchers. It also questions whether primary visual cortex...

BADGE (Dong Heon Cho)

This document discusses an active learning technique called Deep Badge Active Learning. It proposes using gradient embeddings to represent samples and k-means++ initialization for sample selection. Specifically, it uses the gradient embedding as the feature representation, then runs k-means++ initialization to select samples: it starts from the sample with the maximum 2-norm and iteratively adds the samples farthest from those already selected. This aims to select a diverse set of samples, similar to how binary search works. The technique could improve over entropy-based and core-set selection approaches for active learning with convolutional neural networks.

Neural Radiance Field (Dong Heon Cho)

Neural Radiance Fields (NeRF) represent scenes as neural networks that map a 5D input (3D position and 2D viewing direction) to a 4D output (RGB color and volume density). NeRF uses an MLP that is trained to predict volumetric density and color for a scene from many camera views. Key aspects of NeRF include positional encodings of the inputs, which help the MLP represent high-frequency detail, and training through volume rendering, which integrates the predicted color and density along camera rays. NeRF has enabled novel applications beyond novel view synthesis, including pose estimation, dense descriptors, and self-supervised segmentation.

2020 > Self supervised learning (Dong Heon Cho)

Self-supervised learning after 2020

SimCLR, MoCo, BYOL, etc.

Starting from SimCLR

All about that pooling (Dong Heon Cho)

The document discusses various pooling operations used in image processing and convolutional neural networks (CNNs). It provides an overview of common pooling methods like max pooling, average pooling, and spatial pyramid pooling. It also discusses more advanced and trainable pooling techniques like stochastic pooling, mixed/gated pooling, fractional pooling, local importance pooling, and global-feature-guided local pooling. The document analyzes the tradeoffs of different pooling methods and how they balance preserving details against achieving invariance to changes in position or lighting. It references several influential papers that analyzed properties of pooling operations.

Background elimination review (Dong Heon Cho)

This document discusses background elimination techniques, which involve three main steps: object detection to select the target, segmentation to isolate the target from the background, and refinement to improve the quality of the segmented mask. It provides an overview of approaches that have been used for each step, including early methods based on SVMs and more recent deep learning-based techniques like Mask R-CNN that integrate detection and segmentation. The document also notes that segmentation is challenging without object detection cues and discusses types of segmentation as well as refinement methods that use transformations, dimension reduction, and graph-based modeling.

Transparent Latent GAN (Dong Heon Cho)

1. TL-GAN matches feature axes in the latent space to generate images without fine-tuning the neural network.

2. It discovers correlations between the latent vector Z and image labels by applying multivariate linear regression and normalizing the coefficients.

3. The vectors are then adjusted to be orthogonal, allowing different properties to be matched while labeling unlabeled data to add descriptions.

Image matting atoc (Dong Heon Cho)

Image matting is the process of separating the foreground and background of an image by assigning each pixel an alpha value between 0 and 1 that indicates how much of the pixel belongs to the foreground. Traditionally, matting uses a trimap to classify pixels as foreground, background, or uncertain. Early sampling-based methods calculated alpha values from the feature distances to the closest foreground and background pixels. More recent approaches use deep learning: the first deep learning matting method (2016) took local and non-local information as input, and the 2017 Deep Image Matting method used an RGB image and a trimap as input in a fully deep learning framework.

Multi object Deep reinforcement learning (Dong Heon Cho)

This document discusses multi-objective reinforcement learning and introduces Deep OLS Learning, which combines multi-objective learning with deep Q-networks. It presents Deep OLS Learning with Partial Reuse and Full Reuse to handle multi-objective Markov decision processes by finding a convex coverage set of policies that optimize multiple conflicting objectives, such as maximizing server performance while minimizing power consumption. The approach is evaluated on multi-objective versions of the Mountain Car and Deep Sea Treasure problems.

Multi agent reinforcement learning for sequential social dilemmas (Dong Heon Cho)

This document summarizes research on multi-agent reinforcement learning in sequential social dilemmas. It discusses how sequential social dilemmas extend traditional matrix games by making them temporally extended and partially observable. Simulation experiments are described where agents learn cooperative or defecting policies for tasks like fruit gathering and wolfpack hunting in a partially observable environment. The agents' learned policies are then used to construct an empirical payoff matrix to analyze whether cooperation or defection is rewarded more, relating the multi-agent reinforcement learning results back to classic social dilemmas.

Multi agent System (Dong Heon Cho)

This document discusses multi-agent systems and their applications. It provides examples of multi-agent systems for spacecraft control, manufacturing scheduling, and more. Key points:

- Multi-agent systems consist of interacting intelligent agents that can cooperate, coordinate, and negotiate to achieve goals. They offer benefits like robustness, scalability, and reusability.

- Challenges include defining global goals from local actions and incentivizing cooperation. Games like the prisoner's dilemma model social dilemmas around cooperation versus defection.

- The document outlines architectures like the blackboard model and BDI (belief-desire-intention) model. It also provides a manufacturing example using the JADE platform.

Hybrid reward architecture (Dong Heon Cho)

The document discusses Hybrid Reward Architecture (HRA), a reinforcement learning method that decomposes the reward function of an environment into multiple sub-reward functions. In HRA, each sub-reward function is learned by a separate agent using DQN. This allows HRA to learn complex reward functions more quickly and stably than a single reward signal would. An experiment is described where HRA learns to eat 5 randomly placed fruits in an environment over 300 steps more effectively than a standard DQN agent.

Use Jupyter notebook guide in 5 minutes (Dong Heon Cho)

A quick tutorial on Jupyter Notebook.

Introduces what a notebook is, plus simple shortcuts:

- command mode, edit mode

AlexNet and so on... (Dong Heon Cho)

AlexNet (2012): contributions and structure, with simple Keras code.

Multi-GPU training, pooling, data augmentation...

Deep Learning AtoC with Image Perspective (Dong Heon Cho)

Deep learning models like CNNs, RNNs, and GANs are widely used for image classification and computer vision tasks. CNNs are commonly used for classification, detection, and segmentation by learning hierarchical image features. Fully convolutional encoder-decoder networks like SegNet perform pixel-level semantic segmentation, while Mask R-CNN performs instance segmentation by combining classification and bounding box detection. Deep learning has achieved state-of-the-art performance on many image applications due to its ability to learn powerful visual representations from large datasets.

LOL win prediction (Dong Heon Cho)

League of Legends win prediction on master 1 tier.

A pipeline of data analytics and how to improve results.

How can we train with few data (Dong Heon Cho)

The document discusses approaches for using deep learning with small datasets, including transfer learning techniques like fine-tuning pre-trained models, multi-task learning, and metric learning approaches for few-shot and zero-shot learning problems. It also covers domain adaptation techniques when labels are not available, as well as anomaly detection for skewed label distributions. Traditional models like SVMs are suggested as initial approaches, with deep learning techniques applied if those are not satisfactory.

Domain adaptation gan (Dong Heon Cho)

The document discusses domain adaptation and transfer learning techniques in deep learning, such as feature extraction, fine-tuning, and parameter sharing. It specifically describes domain-adversarial neural networks, which aim to make the source and target feature distributions indistinguishable, and domain separation networks, which extract domain-invariant and private features to model each domain separately.

Dense sparse-dense training for dnn and Other Models (Dong Heon Cho)

Deep learning training methods & squeezing methods

Squeeeze models (Dong Heon Cho)

This document discusses various techniques for compressing and speeding up deep neural networks, including singular value decomposition, pruning, and SqueezeNet. Singular value decomposition can be used to compress fully connected layers by minimizing the difference between the original weight matrix and its low-rank approximation. Pruning removes unimportant weights whose magnitude falls below a threshold. SqueezeNet is highlighted as a small CNN architecture designed from the start that achieves AlexNet-level accuracy with 50x fewer parameters and a size under 0.5MB.

Forward-Forward Algorithm

- 1. What is wrong with backpropagation? (The Forward-Forward Algorithm: Some Preliminary Investigations, NeurIPS 2022)

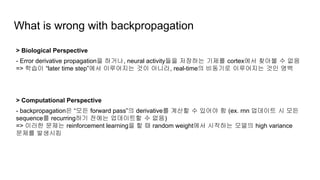

- 2. What is wrong with backpropagation?
  - Biological perspective: there is no evidence that the cortex propagates error derivatives or stores neural activities for later use. => Backpropagating through "later time steps" is especially implausible, because the brain has to process its input in real time.
  - Computational perspective: backpropagation needs perfect knowledge of the computation performed in the forward pass to compute the derivatives (e.g., for an RNN, the derivatives must be carried back through every recurring step of the sequence). => One alternative is reinforcement learning, which perturbs randomly chosen weights, but its estimates have high variance.

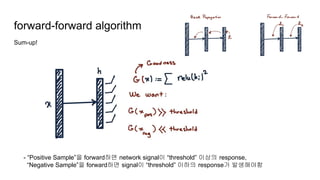

- 3. Forward-Forward algorithm
  - A greedy multi-layer learning procedure inspired by Boltzmann machines.
  - Two main components of the Forward-Forward algorithm:
    1. Goodness function for one layer: the objective function used to update the weights.
    2. Forward (positive) and Forward (negative): the procedure run through the network.

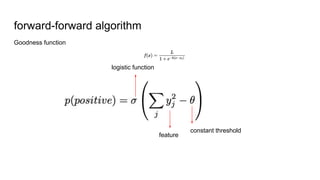

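- 4. Forward-Forward algorithm: goodness function

  $$p(\text{positive}) = \sigma\left(\sum_j y_j^2 - \theta\right)$$

  where $\sigma$ is the logistic function, $y_j$ is the activity (feature) of hidden unit $j$, and $\theta$ is a constant threshold.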

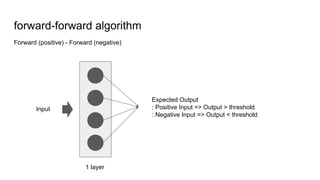
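The goodness probability from slide 4 can be computed directly. A minimal sketch in plain Python, assuming `activities` holds the ReLU outputs $y_j$ of a single layer for a single input; `goodness`, `p_positive`, and the example threshold are illustrative names and values, not taken from the slides.

```python
import math

def goodness(activities):
    """Sum of squared activities of one layer: sum_j y_j^2."""
    return sum(y * y for y in activities)

def p_positive(activities, theta):
    """Logistic probability that the input is positive data: sigma(sum_j y_j^2 - theta)."""
    z = goodness(activities) - theta
    # Numerically stable logistic: avoid exp overflow for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

# A layer whose goodness equals the threshold is maximally uncertain.
print(p_positive([1.0, 1.0], theta=2.0))  # 0.5
```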

- 5. Forward-Forward algorithm: Forward (positive) and Forward (negative) through one layer. Expected output: a positive input should give output > threshold, and a negative input should give output < threshold.

- 6. Forward-Forward algorithm, summed up: train the network so that forwarding a "positive sample" produces a response above the "threshold", and forwarding a "negative sample" produces a response below the "threshold".
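The per-layer objective summarized in slides 5 and 6 can be sketched as a single local update. This is a minimal plain-Python sketch under stated assumptions, not the paper's code: a bias-free ReLU layer, the logistic losses softplus(θ - G) for positive and softplus(G - θ) for negative inputs (the negative log of the probability from slide 4), plain gradient descent, and hypothetical helper names (`forward`, `ff_step`).

```python
import math

def forward(W, x):
    """Bias-free ReLU layer: y_j = max(0, sum_k W[j][k] * x[k])."""
    return [max(0.0, sum(w * xk for w, xk in zip(row, x))) for row in W]

def goodness(y):
    """Sum of squared activities."""
    return sum(a * a for a in y)

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def ff_step(W, x_pos, x_neg, theta, lr):
    """One local update of a single layer (W is modified in place).

    Loss for positive data: softplus(theta - G); for negative data: softplus(G - theta).
    For an active unit (y_j > 0), dG/dW[j][k] = 2 * y_j * x[k]; inactive units get no gradient.
    """
    for x, is_positive in ((x_pos, True), (x_neg, False)):
        y = forward(W, x)
        g = goodness(y)
        # Derivative of the loss with respect to the goodness G.
        dloss_dg = -sigmoid(theta - g) if is_positive else sigmoid(g - theta)
        for j, yj in enumerate(y):
            if yj > 0.0:
                for k, xk in enumerate(x):
                    W[j][k] -= lr * dloss_dg * 2.0 * yj * xk

# Toy usage: one positive and one negative input, 3 hidden units.
W = [[0.5, 0.5], [0.3, 0.2], [0.1, 0.4]]
x_pos, x_neg = [1.0, 0.0], [0.0, 1.0]
for _ in range(200):
    ff_step(W, x_pos, x_neg, theta=2.0, lr=0.1)
print(goodness(forward(W, x_pos)) > 2.0, goodness(forward(W, x_neg)) < 2.0)  # True True
```

Because the update uses only the layer's own goodness, a multi-layer version would call a step like this layer by layer on the previous layer's outputs, with no backward pass between layers.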

- 7. Negative data for Forward-Forward (unsupervised task)
  1. Create a "random" binary mask.
  2. Multiply two different images by the mask and by its complement, then sum them.
  => Such hybrid negative data force the network to learn the features that "characterize shape".

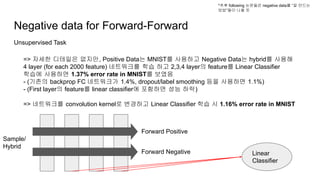
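The two steps in slide 7 can be sketched in plain Python. The blur-then-threshold mask follows the recipe described in Hinton's paper (start from a random bit image, repeatedly blur it with a [1/4, 1/2, 1/4] filter horizontally and vertically, then threshold at 0.5); the number of blur passes, the edge handling, and the helper names (`random_mask`, `hybrid_image`) are assumptions for illustration.

```python
import random

def blur_1d(row):
    """[1/4, 1/2, 1/4] filter; edge pixels reuse their own value (assumed edge handling)."""
    n = len(row)
    return [0.25 * row[max(i - 1, 0)] + 0.5 * row[i] + 0.25 * row[min(i + 1, n - 1)]
            for i in range(n)]

def transpose(m):
    return [list(col) for col in zip(*m)]

def random_mask(height, width, blur_passes=6, seed=None):
    """Random bit image, blurred horizontally and vertically, then thresholded at 0.5."""
    rng = random.Random(seed)
    m = [[float(rng.random() < 0.5) for _ in range(width)] for _ in range(height)]
    for _ in range(blur_passes):  # blur_passes=6 is an assumed value
        m = [blur_1d(row) for row in m]                        # horizontal pass
        m = transpose([blur_1d(col) for col in transpose(m)])  # vertical pass
    return [[1.0 if v > 0.5 else 0.0 for v in row] for row in m]

def hybrid_image(img_a, img_b, mask):
    """Negative example: pixels of img_a where mask == 1, pixels of img_b where mask == 0."""
    return [[a * k + b * (1.0 - k) for a, b, k in zip(ra, rb, rk)]
            for ra, rb, rk in zip(img_a, img_b, mask)]

# Toy usage with two constant 28x28 "images" standing in for two different digits.
ones = [[1.0] * 28 for _ in range(28)]
zeros = [[0.0] * 28 for _ in range(28)]
neg = hybrid_image(ones, zeros, random_mask(28, 28, seed=0))
```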

- 8. Negative data for Forward-Forward (unsupervised task)
  - Setup: positive data are real MNIST images and negative data are hybrids. A 4-layer network (2000 features per layer) is trained, and a linear classifier on the features of layers 2, 3, and 4 reaches a 1.37% error rate on MNIST.
    - (A fully connected network trained with backprop gets 1.4%, or 1.1% with dropout or label smoothing.)
    - (The first layer's features are not used in the linear classifier.)
  - => With convolution kernels and a linear classifier, the error rate on MNIST drops to 1.16%.
  - *?? following ???? negative data? “? ??? ??”?? ?? ?
  - (Diagram: Forward Positive, Forward Negative, Linear Classifier; inputs: Sample / Hybrid.)

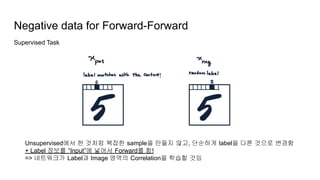
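The evaluation in slide 8 reads features from layers 2 to 4 only. A minimal plain-Python sketch of that feature extractor, under assumptions the slides do not state: bias-free ReLU layers stored as lists of weight rows, each layer's output length-normalized before it is passed on (a practice described in Hinton's paper), and those normalized vectors used as the classifier features. `ff_features` and `first_layer` are hypothetical names; any standard softmax or logistic-regression implementation could then be trained on the returned vectors.

```python
import math

def relu_layer(W, x):
    """Bias-free ReLU layer."""
    return [max(0.0, sum(w * xk for w, xk in zip(row, x))) for row in W]

def normalize(v, eps=1e-8):
    """Scale v to unit length (eps avoids division by zero for an all-zero vector)."""
    n = math.sqrt(sum(a * a for a in v)) + eps
    return [a / n for a in v]

def ff_features(layers, x, first_layer=2):
    """Concatenate the normalized activities of layers first_layer..N (1-indexed)."""
    feats, h = [], x
    for idx, W in enumerate(layers, start=1):
        y = relu_layer(W, h)
        h = normalize(y)  # only the direction of the activity vector is passed on
        if idx >= first_layer:
            feats.extend(h)
    return feats
```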

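- 9. Negative data for Forward-Forward (supervised task): instead of hybridizing samples as in the unsupervised task, the label is included in the "input" together with the image and sent forward (correct label: positive data; wrong label: negative data). => The network learns the correlation between the label and the image.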

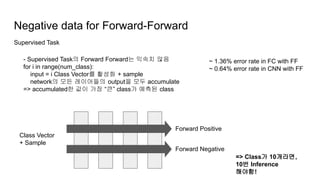
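One simple way to put the label into the input, used for MNIST in Hinton's paper, is to overwrite the first 10 pixels of the flattened image with a one-hot label: the correct label gives positive data and a wrong label gives negative data. A minimal sketch; `embed_label` and `make_pair` are hypothetical helpers, and drawing the wrong label uniformly at random is an assumption.

```python
import random

def embed_label(image, label, num_classes=10):
    """Copy of a flattened image whose first num_classes pixels hold a one-hot label."""
    x = list(image)
    for c in range(num_classes):
        x[c] = 1.0 if c == label else 0.0
    return x

def make_pair(image, label, num_classes=10, rng=random):
    """Positive input (correct label) and negative input (a random wrong label)."""
    wrong = rng.choice([c for c in range(num_classes) if c != label])
    return embed_label(image, label, num_classes), embed_label(image, wrong, num_classes)
```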

- 10. Negative data for Forward-Forward (supervised task): inference with Forward-Forward on a supervised task
  - `for i in range(num_class)`: build the input from the class vector for class i plus the sample, pass it through the network, and accumulate the outputs. => The class with the largest accumulated value is the prediction.
  - (Diagram: Forward Positive, Forward Negative; input: Class Vector + Sample.)
  - => With 10 classes, inference takes 10 forward passes!
  - ~1.36% error rate with a fully connected network trained with FF
  - ~0.64% error rate with a CNN trained with FF
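The per-label inference loop in slide 10 can be made runnable in plain Python. Assumptions, not from the slides: bias-free ReLU layers given as weight rows, the label embedded as one-hot values in the first `num_classes` inputs, and length normalization between layers. `predict` and its `skip_first_layer` flag are hypothetical; the flag is an optional variant that leaves the first layer out of the accumulated goodness.

```python
import math

def relu_layer(W, x):
    return [max(0.0, sum(w * xk for w, xk in zip(row, x))) for row in W]

def normalize(v, eps=1e-8):
    n = math.sqrt(sum(a * a for a in v)) + eps
    return [a / n for a in v]

def embed_label(image, label, num_classes):
    x = list(image)
    for c in range(num_classes):
        x[c] = 1.0 if c == label else 0.0
    return x

def predict(layers, image, num_classes=10, skip_first_layer=False):
    """One forward pass per candidate label; return the label whose goodness,
    accumulated over the layers, is largest."""
    scores = []
    for label in range(num_classes):
        h = embed_label(image, label, num_classes)
        total = 0.0
        for idx, W in enumerate(layers):
            y = relu_layer(W, h)
            if not (skip_first_layer and idx == 0):
                total += sum(a * a for a in y)  # goodness of this layer
            h = normalize(y)  # length-normalize before the next layer
        scores.append(total)
    return max(range(num_classes), key=lambda c: scores[c])
```

The loop makes the cost noted in the slide explicit: the number of forward passes grows linearly with the number of classes.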

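- 11. Experiments on CIFAR-10 (*network: 3 layers with 3072 ReLUs each)
  - Compute goodness for every label: run inference once per label, with the label included in the input vector (supervised task).
  - One-pass softmax: (unsupervised task).
  - => "min ssq" and "max ssq" mean that the goodness function (sum of squared activities) is minimized or maximized for positive data, i.e., whether the network output for positive data should fall below or above the "threshold".
  - => BP appears to suffer more from "overfitting".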
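The "min ssq" and "max ssq" conventions in slide 11 differ only in the sign of the logit. A minimal plain-Python sketch; the function name `positive_probability` and its `mode` parameter are illustrative assumptions.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def positive_probability(activities, theta, mode="max"):
    """Probability that an input is positive data under the two goodness conventions.

    mode="max": positive data should have a sum of squares ABOVE theta (max ssq).
    mode="min": positive data should have a sum of squares BELOW theta (min ssq).
    """
    ssq = sum(a * a for a in activities)
    if mode == "max":
        return sigmoid(ssq - theta)
    if mode == "min":
        return sigmoid(theta - ssq)
    raise ValueError("mode must be 'max' or 'min'")
```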

- 12. Pros & cons
  - Pros
    - Backprop needs the full derivatives in order to learn, whereas forward-forward can learn even without knowing the details of the forward computation.
    - To run trillion-parameter models on only a few watts, forward-forward could suit "mortal computation" (* an argument about hardware efficiency).
  - Cons
    - Slower than backpropagation, and it does not generalize as well (backprop still does better).
    - For big models and big data, backpropagation is better (??? ?? ?????? ???? ? ?? ???…?)

- 13. Future work
  - Separating the negative forward pass from the positive forward pass
  - Negative forward ?? Positive Forward? ????
  - What form should the goodness function take?
  - Are there activation functions better than ReLU (e.g., based on the t-distribution)?
  - Forward-Forward? ?? ????
  - …

![[Tf2017] day4 jwkang_pub](https://cdn.slidesharecdn.com/ss_thumbnails/tf2017day4jwkangpub-171028122727-thumbnail.jpg?width=560&fit=bounds)